In the evolving landscape of virtual production, achieving efficient, real-time rendering is crucial. Creative technologist and stage manager Rune Sørgård searched for a solution that could deliver both inner and outer frustum rendering from Unreal Engine (UE) in a cost-efficient and performance-oriented manner.

“A huge benefit of using the OWL plugin is the ability to live-edit scenes within Unreal Engine without needing to enter Play In Editor mode. This enables real-time adjustments, which are immediately visible on the wall via Live FX. This feature drastically improves efficiency when working on complex virtual production setups.

For my work, I frequently use NDI to separate IBL (image-based lighting) tasks onto a second machine, optimizing performance and flexibility. The OWL capture and NDI stream also remarkably retain post-process data, gamma, and color accuracy, which is not typical with traditional NDI streams.”

Rune Sørgård / Studio Molkom


What is Studio Molkom?

Studio Molkom, located at Molkoms Folkhögskola in Sweden, is a cutting-edge virtual production studio that empowers students to transform their creative visions into reality.

Students are supported to engage in every step of the filmmaking process—from writing scripts, to designing immersive virtual worlds, meticulously planning shots, and executing their ideas using state-of-the-art tools and techniques.

Rune Sørgård is a Senior Creative Technologist with a wealth of experience as a lighting programmer, stage manager, and educator. He specializes in Virtual Production, In-Camera Visual Effects (ICVFX), and Image-Based Lighting.

What is the Studio Molkom workflow?

The Off World Live Media Production Toolkit for Unreal Engine, in conjunction with Assimilate Live FX (LFX), offers an incredibly streamlined workflow that meets all my needs—particularly by eliminating the complexities of nDisplay, while still maintaining high-quality results.

What is Live FX?

Assimilate’s Live FX is a media server in a box. It can play back 2D and 2.5D content, including 360 and 180 media, at any resolution or frame rate to any sized LED wall from a single machine. It supports camera tracking, projection mapping, image-based lighting and much more.

Native support for Notch Blocks and the USD file format allows Live FX to be used with 3D environments as well - but it doesn’t stop there! Through a Live Link connection and GPU texture sharing, Live FX can also be combined with Unreal Engine and Unity.

Live FX’s extensive grading and compositing features enable users to quickly implement DoP feedback in real time and even allow for set extensions beyond the physical LED wall.

Why use Off World Live and Live FX in parallel?

Assimilate Live FX’s Projection Setup tools are incredibly flexible, allowing for easy integration and control of UE content without the usual hassle of configuring nDisplay.

Live FX provides full control over UE CineCams and handles camera tracking very well, which makes camera management straightforward. With OWL, you can send multiple live feeds using protocols like Spout or NDI, allowing for flexible and customizable setups that fit any project size.

GPU Texture Streaming and Performance

One of the standout features of both OWL and LFX is their ability to leverage Spout and UE’s texture streaming functionality directly from the GPU. This bypasses the need for encoding or decoding video feeds and keeps latency minimal, as the data is shared in real time between applications.

With TextureShare and Spout, all the rendering work happens directly on the GPU, so no encode or decode step adds latency. The OWL plugin makes this process even more powerful by allowing me to send CineCam, Viewport, or Virtual Webcam feeds from UE and OWL over to LFX.

Spout, as a texture-sharing method, enables multiple feeds to be sent to LFX without encoding latency, as all the rendering happens directly on the same computer. This method works exceptionally well for high-performance setups, as there’s no video encoding required. The only limitation is that Spout requires both applications (UE and LFX) to run on the same machine and use the same GPU.

Alternatively, OWL also supports NDI, which can work across multiple systems but does introduce some latency due to the necessary video encoding and decoding processes. For smaller projects, everything can be managed on one machine with Spout, while for more complex productions, NDI allows for a distributed workflow, separating video and Image-Based Lighting (IBL) tasks across different machines.
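The choice between Spout and NDI described above can be summarized as a small decision rule. The following is only a minimal sketch: the `Feed` class, the host names, and the `choose_transport` function are hypothetical illustrations, not part of OWL, Live FX, Spout, or NDI. It also simplifies the Spout requirement to "same machine" and does not check that both applications share the same GPU.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feed:
    """A hypothetical description of one live feed leaving Unreal Engine."""
    name: str
    sender_host: str    # machine running UE with the OWL plugin
    receiver_host: str  # machine that consumes the feed


def choose_transport(feed: Feed) -> str:
    """Pick Spout for same-machine feeds, NDI for feeds that cross machines.

    Assumption: "same machine" is decided purely by host name. Spout also
    needs both applications on the same GPU, which is not modeled here.
    """
    if feed.sender_host == feed.receiver_host:
        return "Spout"  # GPU texture sharing, no video encode/decode
    return "NDI"        # network stream, adds some encode/decode latency


# Example: wall video stays on the stage PC, IBL is moved to a second machine.
feeds = [
    Feed(name="Video", sender_host="stage-pc", receiver_host="stage-pc"),
    Feed(name="IBL", sender_host="stage-pc", receiver_host="ibl-pc"),
]
plan = {feed.name: choose_transport(feed) for feed in feeds}
print(plan)
```

For a small project where everything runs on one PC, every feed in such a plan would resolve to Spout; NDI only appears once a task is moved to another machine.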

Core Components


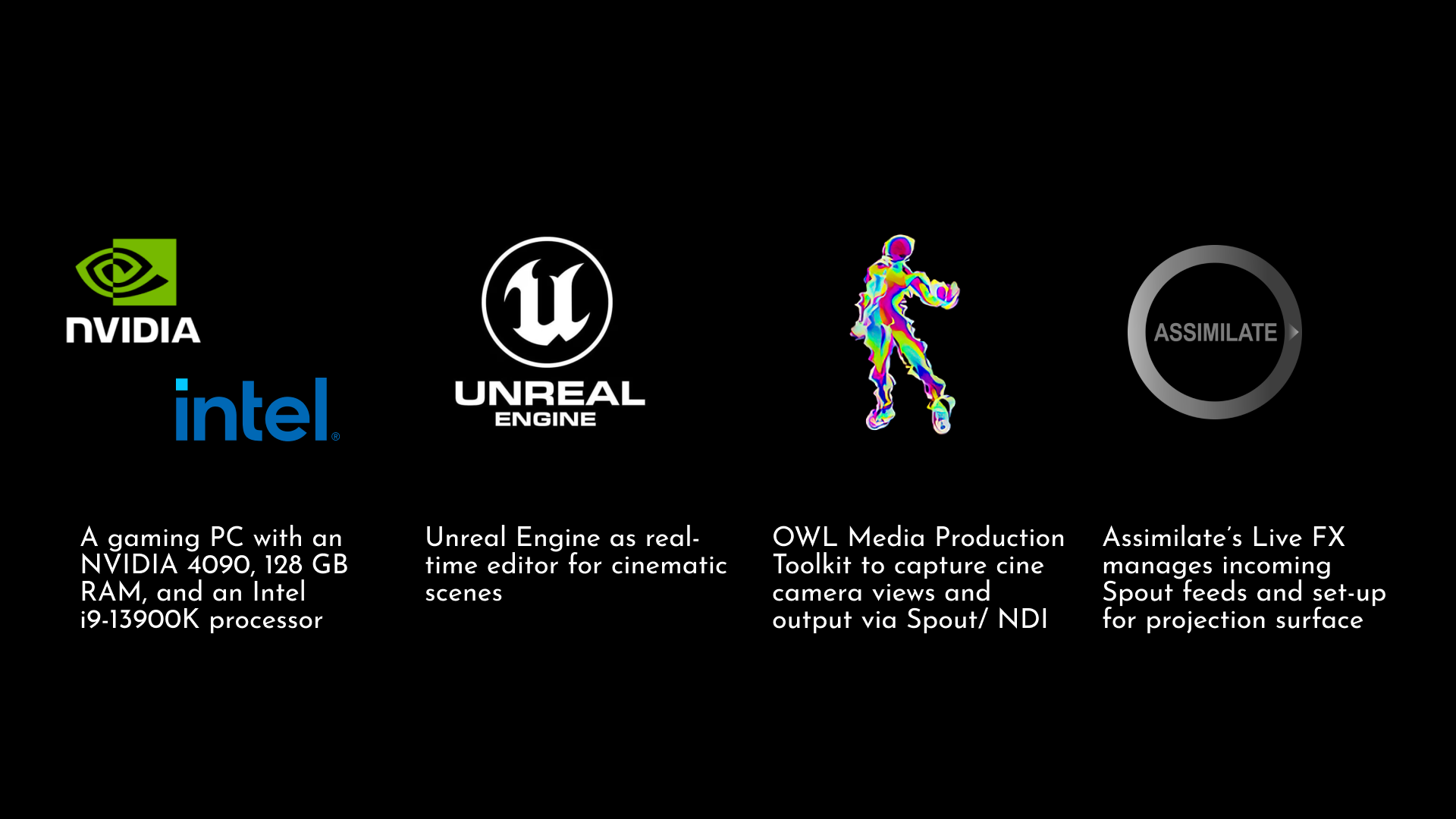

This workflow requires four main components:

- PC: A gaming PC with an NVIDIA GeForce RTX 4090, 128 GB RAM, and an Intel i9-13900K processor
- Unreal Engine: The real-time editor for cinematic scenes
- OWL Media Production Toolkit: Captures cine camera views and outputs them via Spout/NDI
- Assimilate Live FX: Manages the incoming Spout feeds and the setup of the projection surface

Technical Flow Diagram

In Unreal Engine (UE):

- Use the Live Wizard in OWL to create a CineCam with Spout, naming it InnerFrustum.
- Repeat the process for the OuterFrustum.
- Use the LFX LiveLink Plugin to connect your InnerFrustum OWL camera via Live Link, allowing Live FX to take control of the CineCam.

In Assimilate Live FX (LFX):

- Create a new project and import your two Spout feeds, naming them InnerFrustum and OuterFrustum respectively.
- Set up a new projection, with the InnerFrustum and OuterFrustum feeds in the appropriate slots.
- Ensure your stage projection surface is correctly configured.

With this setup, the InnerFrustum camera is controlled via a tracker, while the OuterFrustum camera for IBL remains independent of any camera movement. One may also opt to use an NDI camera for the OuterFrustum, which allows for the flexibility to send the signal to multiple systems.
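As a rough way to sanity-check this layout before a shoot, the two feeds and their roles can be written down as data and validated. This is a sketch under stated assumptions: `FrustumFeed`, `LFX_SLOTS`, and `check_setup` are hypothetical names invented for illustration and do not correspond to any OWL or Live FX API. Only the feed names InnerFrustum and OuterFrustum and the tracked/untracked roles come from the steps above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FrustumFeed:
    """Hypothetical record of one OWL camera feed created in Unreal Engine."""
    name: str
    transport: str  # "Spout" or "NDI"
    tracked: bool   # True if the camera follows the tracker via Live Link


# Feeds created on the UE side, as described in the steps above.
UE_FEEDS = [
    FrustumFeed(name="InnerFrustum", transport="Spout", tracked=True),
    FrustumFeed(name="OuterFrustum", transport="Spout", tracked=False),
]

# Hypothetical mapping of projection slots to feed names on the LFX side.
LFX_SLOTS = {"inner": "InnerFrustum", "outer": "OuterFrustum"}


def check_setup(feeds: list[FrustumFeed], slots: dict[str, str]) -> list[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    by_name = {feed.name: feed for feed in feeds}
    problems = []
    for slot, feed_name in slots.items():
        if feed_name not in by_name:
            problems.append(f"slot '{slot}' expects missing feed '{feed_name}'")
    inner = by_name.get(slots.get("inner", ""))
    outer = by_name.get(slots.get("outer", ""))
    if inner is not None and not inner.tracked:
        problems.append("inner frustum camera should be tracker-controlled")
    if outer is not None and outer.tracked:
        problems.append("outer frustum (IBL) camera should ignore tracking")
    return problems


print(check_setup(UE_FEEDS, LFX_SLOTS))
```

Switching the OuterFrustum entry to `transport="NDI"` would model the variant where the IBL signal is sent to other systems; the check itself does not depend on the transport.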